The Coding Agent Metagame

There's an endless debate around coding agents. What's the best way to prompt? How about reviewing the outputs? Are the results garbage, and do agents really waste more time in the end?

But the one thing that all my engineering friends seem to agree on is that "coding today feels much more fun". And the majority of them point to the same tool: Claude Code. [1]

After working on Codex at OpenAI [2], I wanted to do a closer study of Claude Code to understand exactly what makes developers so excited about it.

Initially, I was very skeptical about using a CLI tool to manage coding edits. My entire programming career has been IDE-centric. But after using Claude Code for a few weeks, it's hard not to feel that sense of fun.

I could say many great things about the way Claude Code is built–but the most underrated aspect is the feeling that you can endlessly hack it to your liking.

Using Claude Code feels more like playing the piano than shuffling tickets in Jira. I get the vague sense that by using the tool differently, I could become a virtuoso.

There's not just the game of writing code, but a metagame that we're all playing by using it in weird and wonderful ways.

A retro gaming aesthetic

When I first ran claude, I was quickly struck by 1) the attention to detail and 2) the inspiration from old-school video games.

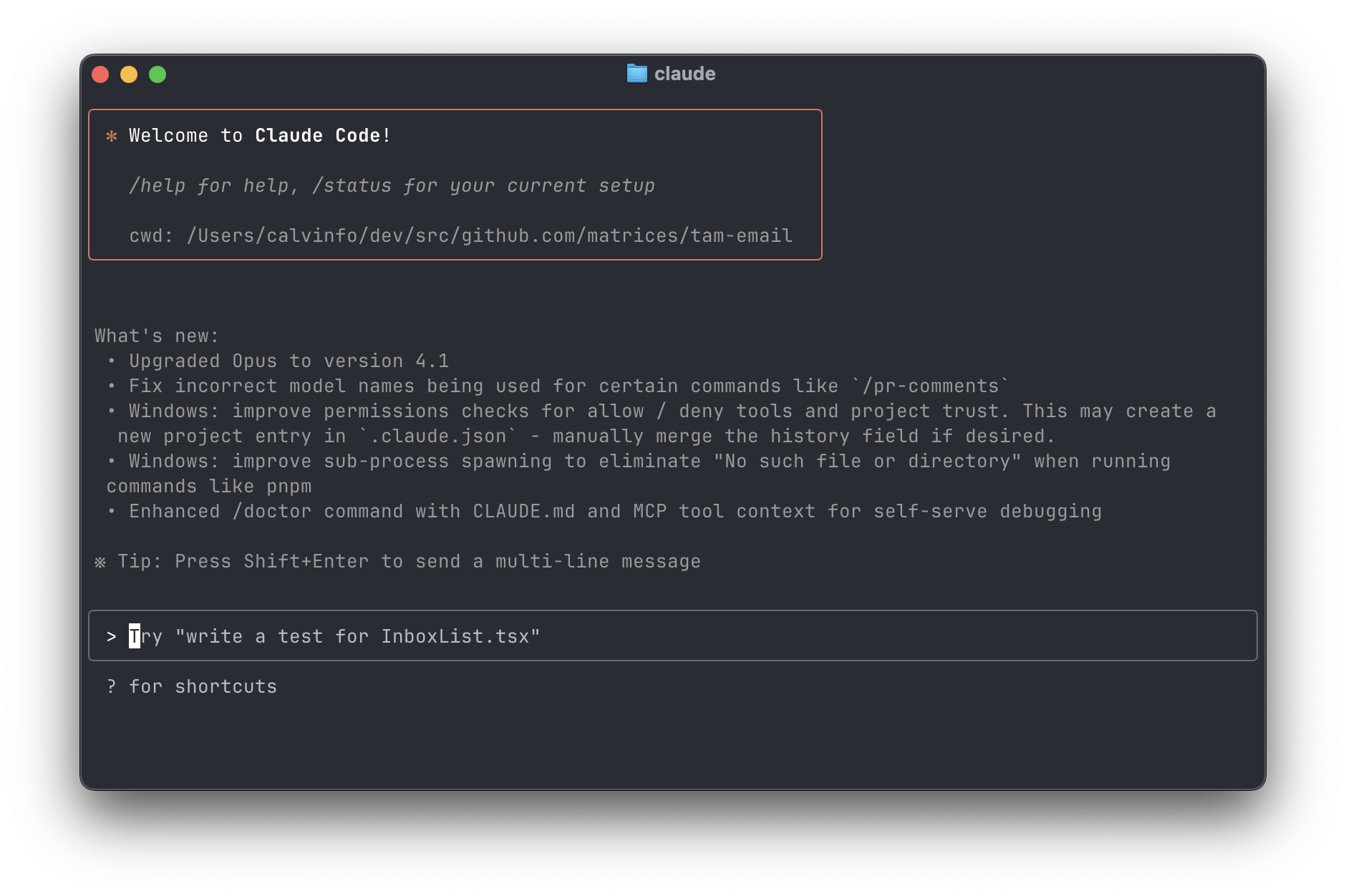

Here's the first thing I see when I launch Claude Code.

Unlike your average CLI tool, it actually has... UI design?

Small accent colors make the UI playful and interesting. The three shades of text color draw your eye to specific sections (header > input box > changelog). Animated text subtly changes color. There are tasteful unicode icons that feel vaguely reminiscent of the Anthropic logo. There's a fun retro aesthetic. And I'm greeted with a clear call to action: Try "write a test for InboxList.tsx".

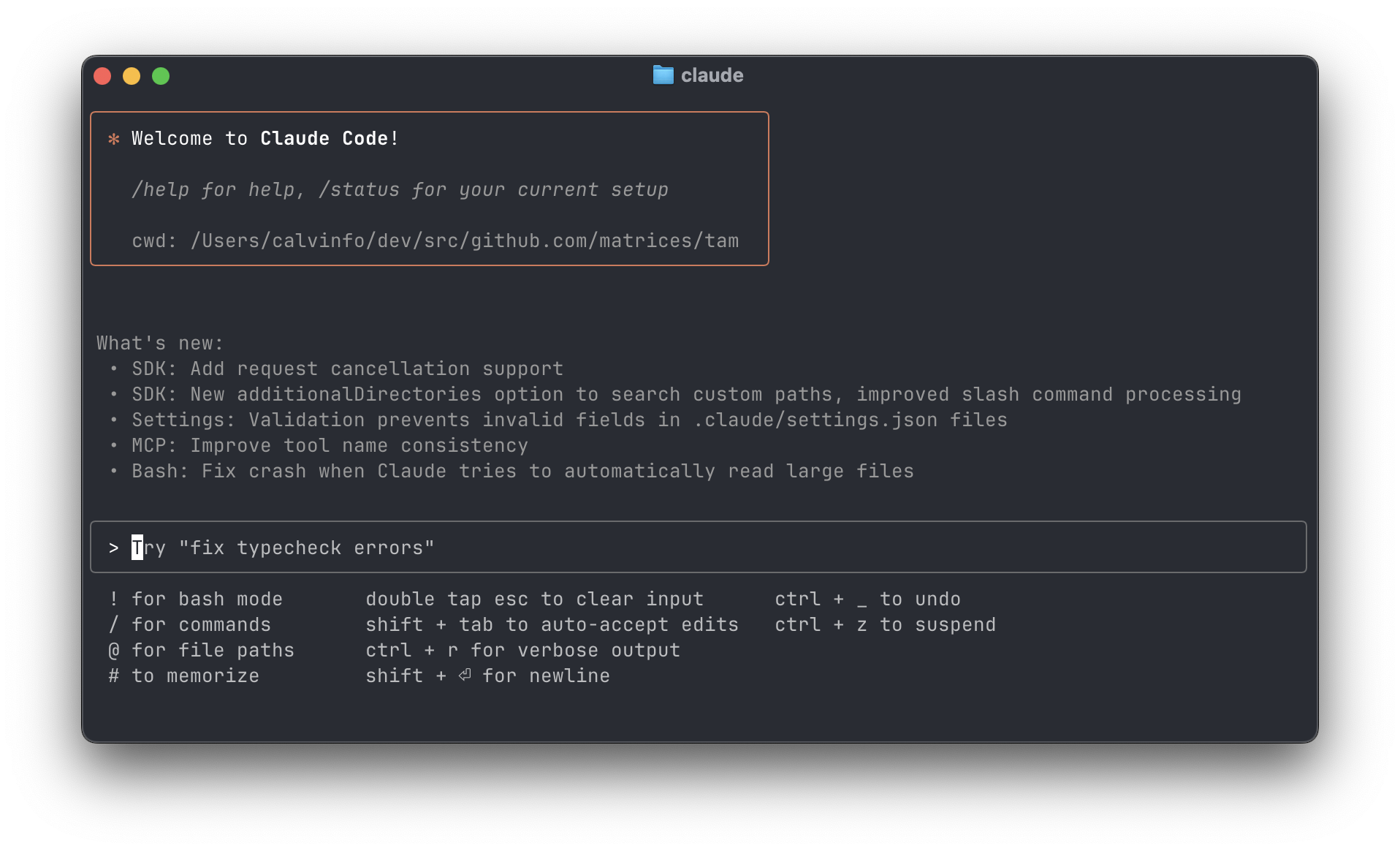

The very first screen also tells me how to get more from the tool. It gives me a changelog, a prompt, and a tip of the day. Expanding the shortcut menu shows me how to use the advanced features.

When making a choice, Claude Code gives me a handful of options for things like follow-ups.

I can select option 1, 2, or 3 just like I would in an RPG. The hotkeys all work the way I'd expect.

Claude Code is also partially defined by what it is not–it's not an IDE.

This is important for two big reasons:

- It feels lightweight. I'm not signing up to learn an entirely new editor. I'm just running a tool in my terminal.

- It is unique and differentiated. Authoring code outside an IDE feels like a weird new paradigm–but so is writing AI-generated code. Rather than compete with IDEs on their terms, a CLI tool embraces a different paradigm.

The resulting product feels easy to try and also has the freedom to optimize for a future where humans aren't the ones writing code.

Claude Code can devote more screen real estate to things like subagents or hooks because I don't expect to use it to view my folder tree or open files.

Building trust

After onboarding users, the biggest hurdle for any agentic tool is building trust. Users need to answer questions like: "What complexity of tasks can the model one-shot?" and "How should I verify the outputs without needing to examine every single line?"

Vibe-coding apps often struggle with building trust. On the one hand, the user can instantly verify that the behavior works by clicking through the app (great!). On the other, the surface area makes it harder to inspect what is going on when things do go wrong.

When we were building the cloud version of Codex at OpenAI, we didn't want the user checking in on the model and trying to "steer" it.

This tends to be good from a correctness perspective: the model is surprisingly adept at failure recovery, and giving the agent a "longer leash" to execute code dramatically improves performance. But it doesn't do as much to instill confidence in the user that the model is really doing the right thing.

We knew we needed some solution here, so we showed users the terminal commands that the model was executing in its own environment. This felt like a nice compromise, in addition to having the model output the results of tests and lint steps.

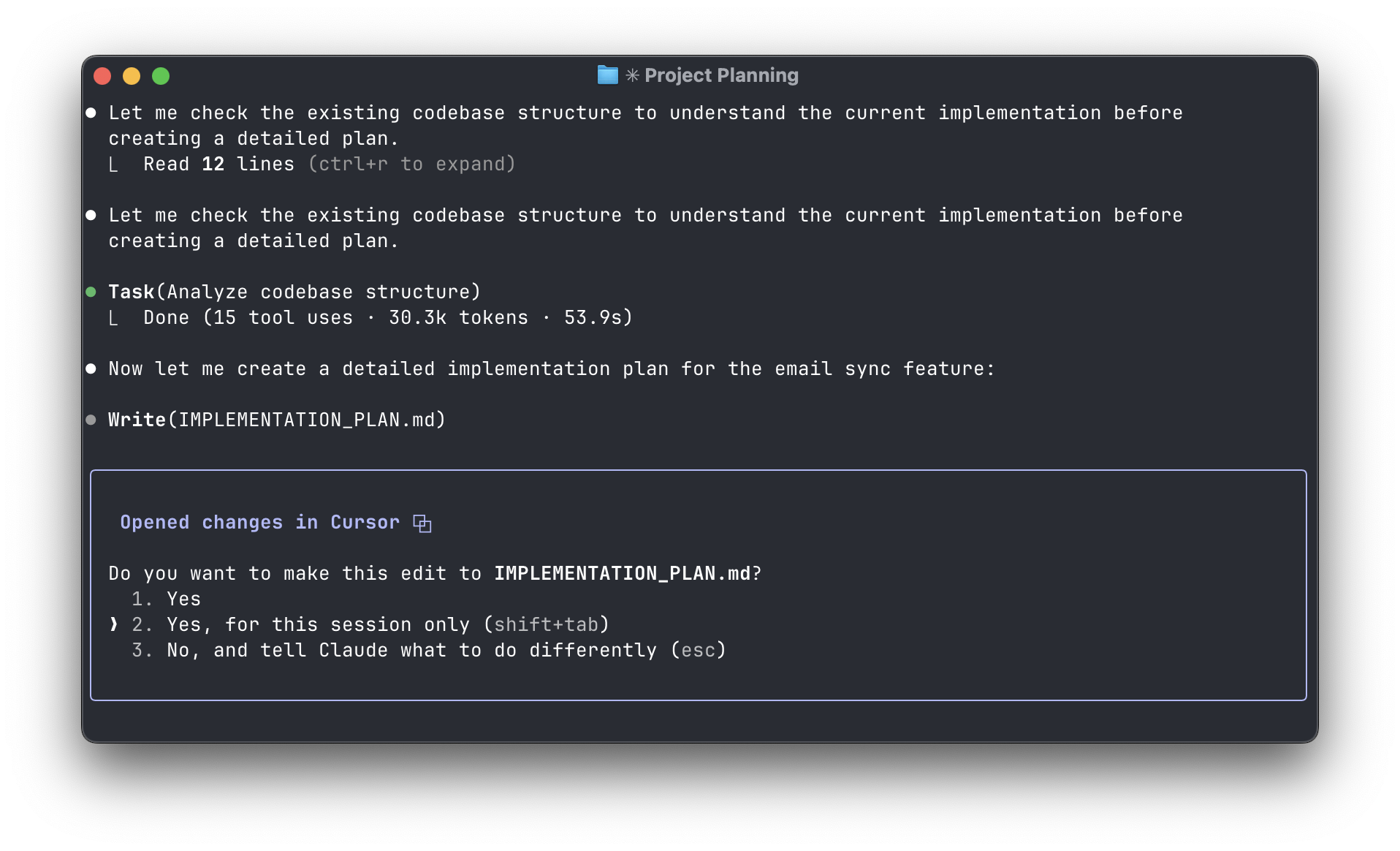

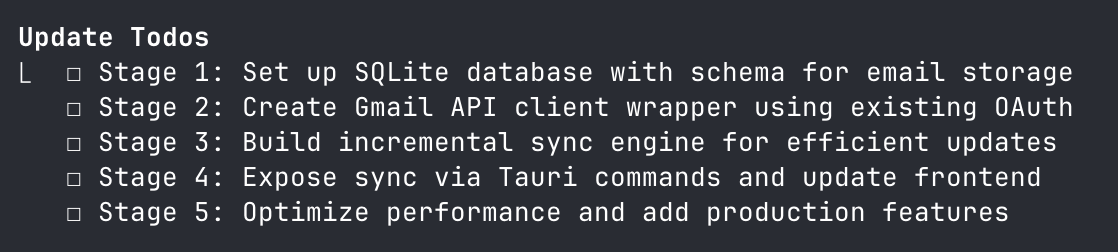

The one problem is that terminal commands are far less intuitive than TODO lists—completed TODOs are straightforward, but sed commands... less so.

I'm not sure whether leveraging the TODO list tool actually improves eval scores or is just a courtesy to the user. But it certainly helps the person in front of the keyboard understand what's going on.

There's also the question of context management. In an IDE, it's less clear which files and tabs are being pulled into the context window. Creating a new chat might erase the model's prior thinking, but will it still include the file you're looking at?

Claude Code makes this easier to reason about: everything invoked within the terminal session is included in the context. If Claude Code is compacting the context window, it will tell you that. If you want to run a compaction manually, the docs explain how.

Speed and momentum

A large part of what contributes to that feeling of flow is Claude Code's speed.

Sampling from the models feels fast. When inspecting the network traffic of the CLI, I see it switching between all three models (Haiku, Sonnet, Opus) depending on what the use case is.

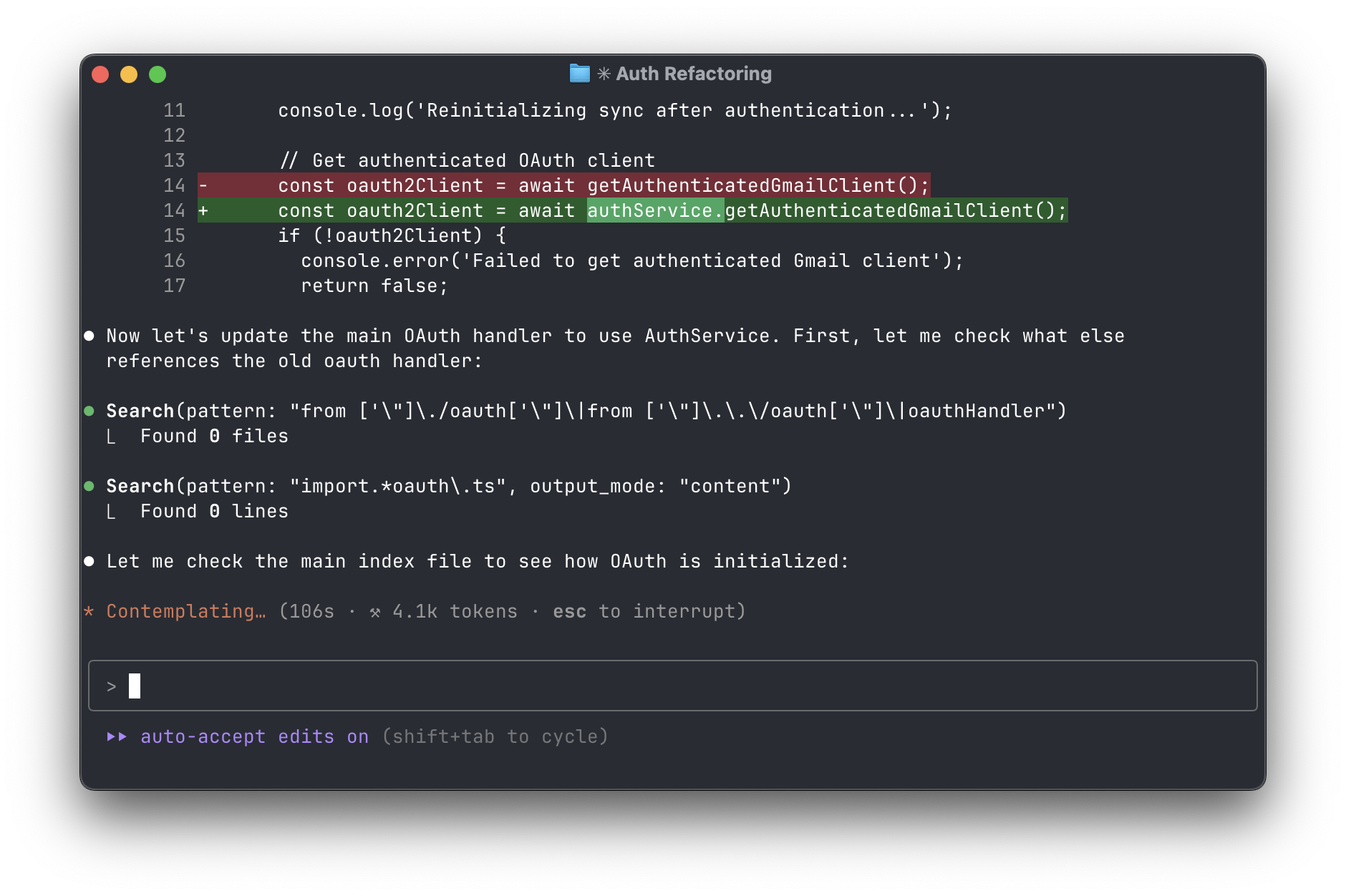

Looking carefully at the interface, I also notice a bunch of different small cues which tell me that Claude Code is doing something:

- animated icons and flavor text (Contemplating / Flibbertigibetting / Symbioting); they change as work progresses and the colors subtly 'pulse'

- a current runtime counter that counts up every second

- a token counter paired with an icon that switches between "upload", "download", and "working"

The net effect is that the interface feels responsive and alive. I know that work is happening as I use it.

An OpenAI friend of mine once remarked "I get stressed out when <INTERNAL_AGENT> is not running on my laptop".

In a time when new SOTA models appear every week and VCs are funding millions of GPUs, not using coding agents feels wasteful. It's like leaving free intelligence on the ground.

Claude Code taps deeply into this mentality. Rather than trying to obscure the number of tokens I'm using, it encourages me to maximize them. And if I pay for the "Claude Max" plan, the tokens I get feel unlimited, even if the per-token cost ends up being high.

The machine that builds the machine

This brings me to the last magic piece of Claude Code: the notion that through dedicated practice, I can learn to become 10x as productive.

When using Claude Code, there are effectively two parts of the loop I can optimize:

- core agent loop: building the core product I'm working on (adding new features, fixing bugs, etc.)

- product harness: the tools, environment, memory, and prompts that the CLI uses to run the core agent loop

It turns out that optimizing the product harness is almost as much fun as actually building product.

All coding agents involve an element of gambling. When I submit a prompt, I never know where it's going to end up. I can keep spinning the wheel a bunch of times to see how it pans out.

But there's a key difference with Claude Code. Instead of just changing the way that I prompt the model, Claude Code encourages me to change the product harness itself.

That means that whenever Claude Code does the wrong thing, instead of blaming the tool, I find myself asking: "What could I have done better?" Could changes in the product harness give me a better result?
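One small way to act on that question is to write the lesson down where the agent will read it next time. Here's a minimal sketch, assuming the harness keeps its memory in a plain Markdown file. The helper `record_lesson`, the default heading, and the `CLAUDE.md` file name in the usage note are my own illustrative choices, not something the tool requires.

```python
from pathlib import Path


def record_lesson(memory_file: Path, lesson: str,
                  heading: str = "## Lessons learned") -> bool:
    """Append `lesson` as a bullet at the end of the memory file.

    Adds `heading` first if the file does not contain it yet.
    Returns False (and writes nothing) when the bullet already exists.
    """
    text = memory_file.read_text(encoding="utf-8") if memory_file.exists() else ""
    lines = text.splitlines()
    bullet = f"- {lesson.strip()}"

    if bullet in lines:
        return False  # already recorded, keep the file free of duplicates

    if heading not in lines:
        # Separate the new section from existing content with a blank line.
        prefix = text.rstrip("\n") + "\n\n" if text.strip() else ""
        text = prefix + heading + "\n"
    elif not text.endswith("\n"):
        text += "\n"

    memory_file.write_text(text + bullet + "\n", encoding="utf-8")
    return True
```

After a bad run, something like `record_lesson(Path("CLAUDE.md"), "Run the linter before committing")` turns a one-off correction into part of the harness, so the next session starts with it.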

This "metagame" ends up being the #1 marketing tool for Claude Code, because it's something everyone does. It doesn't matter whether you write embedded Rust frameworks or TypeScript frontends, you're going to be experimenting with how best to use all of the features of Claude Code.

Because users can "own" their product harness, everyone wants to show off their tips and tricks. My Twitter feed is filled with Claude Code automations and best practices.

The product shape helps here too: CLI tools are naturally composable. It's easy to start imagining the possibilities: "Could I kick off tasks from a GitHub issue?", "Could I chain agents together? And have them invoke the CLI in series?", "Could I use git worktrees and run a bunch of sessions in parallel?" [3]
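To make the worktree idea concrete, here is a rough sketch of what such a script might look like. It's one way to wire this up, not a recommended setup. `Task`, `plan_commands`, `run_task`, and `run_all` are hypothetical names, the `agent/<slug>` branch scheme is arbitrary, and the agent command is passed in as a parameter. The usage note assumes the CLI can be run non-interactively as `claude -p "<prompt>"`, so check your installed version before relying on it.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, Sequence


@dataclass(frozen=True)
class Task:
    slug: str    # used for the branch name and the worktree directory
    prompt: str  # what the agent is asked to do


def plan_commands(repo: Path, task: Task,
                  agent_cmd: Sequence[str]) -> list[tuple[list[str], Path]]:
    """Return (command, working directory) pairs for a single task."""
    worktree = repo.parent / f"{repo.name}-{task.slug}"
    return [
        # 1. Create an isolated checkout on its own branch.
        (["git", "worktree", "add", "-b", f"agent/{task.slug}", str(worktree)], repo),
        # 2. Run the agent inside that checkout with the task prompt.
        ([*agent_cmd, task.prompt], worktree),
    ]


def run_task(repo: Path, task: Task, agent_cmd: Sequence[str],
             run: Callable = subprocess.run) -> str:
    """Execute one task's commands in order; raises if a command fails."""
    for cmd, cwd in plan_commands(repo, task, agent_cmd):
        run(cmd, cwd=cwd, check=True)
    return task.slug


def run_all(repo: Path, tasks: Sequence[Task], agent_cmd: Sequence[str],
            run: Callable = subprocess.run, max_workers: int = 4) -> list[str]:
    """Run every task in its own worktree, several at a time."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda t: run_task(repo, t, agent_cmd, run), tasks))
```

A call might look like `run_all(Path.cwd(), [Task("fix-login", "Fix the login redirect bug")], ["claude", "-p"])`. Keeping the planning step separate from execution means I can print the commands and sanity-check them before anything touches the repo.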

The end result resembles an automation game, but when I'm done playing, I've brought something useful into the world.

A feeling of flow

A friend pointed out that what makes Claude Code fun isn't "gamification" in the traditional sense. There are no badges, levels, streaks, or anything like that.

Instead, it's the feeling of flow state and mastery that you get from learning to use the tool well.

There's a low barrier to entry–start by running a command and typing a prompt into the box. The product gradually reveals new ways of using the CLI through daily tips and suggestions. There's a sense of momentum, paired with clear, immediate feedback on what the agent is doing.

And most importantly, there are opportunities to improve your skill by using more advanced features and automations. Claude Code encourages you to keep honing that "product harness loop".

While coding is the perfect Petri dish for these sorts of ideas, these same techniques can be applied to other domains. At the end of the day, most knowledge work still involves critical thinking, resource allocation, and strategic planning. Perhaps enterprise software should borrow more ideas from Minecraft and fewer from 1950s-era accounting.

If coding is any indication, the tools that seem to be winning allow the user to hack their own workflows and optimize for that "feeling of flow".

My biggest takeaway is that it's not just the evals that matter–the product harness matters too.

Does all of this tweaking and customization actually make us more productive or is it just fun to do? There's probably some efficient frontier in between. And we'll need malleable tools to find it.

Footnotes

1. I'm discussing Claude Code in this post since it pioneered the agentic CLI concept. In my (admittedly biased) opinion of my former employer, the Codex CLI team is doing great work and is incorporating a bunch of these same ideas. They have shipped a ton of updates, so check it out if you haven't in a while (be sure to upgrade to the latest version!). Gemini followed suit as well, but I haven't had the chance to test it nearly as much.

2. The cloud version, not the CLI.

3. This was actually the "killer feature" bet we made with Codex. The fact that it operates asynchronously means that you can kick off as many tasks as you'd like in parallel, no worktrees required. In the fullness of time I think it will also be the way users want to interact with the models. But it does require some "re-tooling" on the user's side.